YouTube’s ‘dislike’ and ‘not interested’ options don’t do much for your recommendations, study says

It's no secret that both viewers and creators are puzzled by YouTube's recommendation algorithm. Now, a new study by Mozilla suggests that users' recommendations don't change much when they use options like "dislike" and "not interested" to stop YouTube from suggesting similar videos.

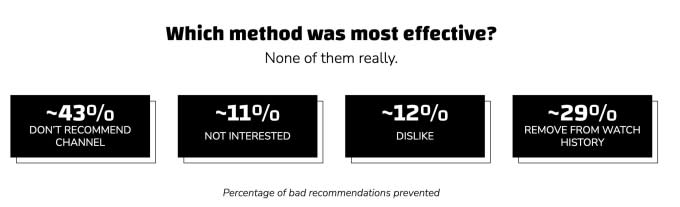

Mozilla's study found that YouTube kept serving participants videos similar to ones they had rejected, even after they used feedback tools or changed their settings. Among the tools meant to prevent bad recommendations, clicking "not interested" and "dislike" was mostly ineffective, preventing only 11% and 12% of bad recommendations, respectively. Options like "don't recommend channel" and "remove from watch history" were more effective, cutting 43% and 29% of bad recommendations, respectively. Overall, study participants were dissatisfied with YouTube's ability to keep bad recommendations out of their feeds.

Image Credits: Mozilla

Mozilla's study drew on data from 22,722 users of its own RegretReporter browser extension, which lets users report "regrettable" videos and better control their recommendations, and analyzed more than 567 million videos. It also conducted a detailed survey of 2,757 RegretReporter users to better understand their feedback.

The report noted that 78.3% of participants had used YouTube's own feedback buttons, changed their settings or avoided certain videos to "teach" the algorithm to suggest better content. Of the people who took any kind of step to better control their YouTube recommendations, 39.3% said those steps didn't work.

“Nothing changed. Sometimes I would report things as misleading and spam and the next day it was back in. It almost feels like the more negative feedback I provide to their suggestions the higher bullshit mountain gets. Even when you block certain sources they eventually return,” a survey taker said.

Meanwhile, 23% of people who tried to change YouTube's suggestions gave a mixed response. They cited effects like unwanted videos creeping back into their feeds, or having to spend sustained time and effort to change their recommendations for the better.

“Yes they did change, but in a bad way. In a way, I feel punished for proactively trying to change the algorithm's behavior. In some ways, less interaction provides less data on which to base the recommendations,” another study participant said.

Mozilla concluded that even YouTube's most effective tools for staving off bad recommendations were not sufficient to change users' feeds. It said that the company "is not really that interested in hearing what its users really want, preferring to rely on opaque methods that drive engagement regardless of the best interests of its users."

Mozilla recommended that YouTube design easy-to-understand user controls and give researchers granular data access so they can better understand the video-sharing site's recommendation engine.

“We offer viewers control over their recommendations, including the ability to block a video or channel from being recommended to them in the future. Importantly, our controls do not filter out entire topics or viewpoints, as this could have negative effects for viewers, like creating echo chambers. We welcome academic research on our platform, which is why we recently expanded Data API access through our YouTube Researcher Program. Mozilla’s report doesn’t take into account how our systems actually work, and therefore it’s difficult for us to glean many insights,” Elena, a YouTube spokesperson, said in response to the study.

Last year, Mozilla conducted another YouTube study, which found that the service's algorithm had recommended 71% of the videos users "regretted" watching, including clips containing misinformation and spam. A few months after that study was made public, YouTube published a blog post defending its decision to build its current recommendation system and filter out "low-quality" content.

After years of relying on algorithms to suggest more content to users, social networks including TikTok, Twitter and Instagram are trying to provide users with more options to refine their feeds.

Lawmakers around the world are also taking a closer look at how social networks' opaque recommendation engines can affect users. The European Union passed the Digital Services Act in April to increase platforms' algorithmic accountability, while the U.S. is considering the bipartisan Filter Bubble Transparency Act to address a similar issue.

This story has been updated with a response from Google.

Yahoo Finance