How social media platforms are handling the 2020 election

Labels are everywhere, but misinformation is still spreading.

Election night ended the way many experts predicted it might: with several races too close to call and President Donald Trump claiming victory anyway. For social media platforms, Election Day was also their chance to prove that tightening their policies in preparation for a contested election was time well spent. Here’s how the biggest social media apps have fared so far.

Twitter

Twitter made it clear months ahead of the election that it was taking its role in preventing the spread of misinformation seriously. In the weeks before the vote, the company implemented a number of policy changes to prepare for the kinds of threats it might face.

Before Election Day, the company issued an early warning that it would label posts that tried to declare victory prematurely, and explained how it would determine when a result was “official.” But the real test came when Trump started tweeting. When the president tweeted in the early hours of Wednesday morning that “they are trying to STEAL the Election,” the company added a label within minutes. The label, which called the tweet “misleading,” prevented it from appearing directly on users’ timelines. The company also disabled retweets and likes. By Wednesday afternoon, the company had labeled five tweets from the president.

They are working hard to make up 500,000 vote advantage in Pennsylvania disappear — ASAP. Likewise, Michigan and others!

— Donald J. Trump (@realDonaldTrump) November 4, 2020

Twitter also labeled a number of other tweets from prominent Republicans and other influential accounts for breaking its rules. On Tuesday, the company added a label to a tweet from Trump’s campaign account, which said the president had won South Carolina before results in the state were final. It also labeled a tweet from Florida Governor Ron DeSantis, which said Trump had won Florida before the race had been called, and added a notice to a tweet from North Carolina Senate candidate Thom Tillis, who said he had won his race.

Florida voters have made their voices heard, delivering a BIG WIN for President @realDonaldTrump. pic.twitter.com/94gyYdbpLE

— Ron DeSantis (@RonDeSantisFL) November 4, 2020

Separately on Tuesday, the company shut down a handful of high-profile accounts “for breaking the company’s spam or hateful conduct policies,” NBC News reported. Banned accounts included a former Congressional candidate, who tweeted that “immigrants would enter the U.S. and commit violence if Trump is not elected.”

By Wednesday, the company had also labeled many more tweets, including messages from Eric Trump and White House Press Secretary Kayleigh McEnany, who both tweeted that the president had won Pennsylvania.

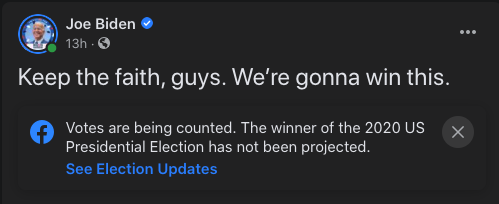

Like Twitter, Facebook had also warned early on that it would label posts that prematurely declared victory. The social network added labels to all of Trump’s election-related posts. It also labeled Joe Biden’s Facebook posts, though none of his posts explicitly declared victory.

“Once President Trump began making premature claims of victory, we started running notifications on Facebook and Instagram that votes are still being counted and a winner is not projected,” the company said in a statement early Wednesday. “We're also automatically applying labels to both candidates’ posts with this information.”

But Facebook also came under fire because its labels are not as clear as Twitter’s, which explicitly called the president’s comments misleading. Facebook also doesn't do anything to prevent these posts from spreading more widely. The company has throttled debunked conspiracy theories, like a claim that use of Sharpies may have affected vote counting. But Trump’s posts have continued to be shared widely. As of Wednesday morning, the president’s (now-labeled) comments were among the most-shared posts on the platform according to analytics data posted by CNN reporter Donie O’Sullivan.

We are continuing to label all posts from both presidential candidates making it clear that votes are still being counted and a winner has not been projected. We are also applying these labels to other individuals who declare premature victory in individual states or overall. pic.twitter.com/gmGdn4q52s

— Facebook Newsroom (@fbnewsroom) November 4, 2020

In another confusing turn, Facebook said late on election night its policy on labels would not apply to a candidate who prematurely declared victory in an individual state. The company told The Wall Street Journal the policy would only apply to “premature calls for the final result of the presidential race.” But on Wednesday, company officials said Facebook was “expanding” the rules to apply at the state level as well. The change came as the social network labeled a post from White House Press Secretary Kayleigh McEnany, who said Trump won Pennsylvania.

Instagram has used labels very similar to Facebook’s, and the app has been adding election messages to users’ posts for some time ahead of the election. But there have also been some hiccups, like a lingering Instagram notification on Tuesday that informed some users that “Tomorrow is Election Day,” which would seem to be at odds with the company’s policy barring misinformation about how to vote.

Once President Trump began making premature claims of victory, we started running notifications on Facebook and Instagram that votes are still being counted and a winner is not projected. We're also automatically applying labels to both candidates’ posts with this information. pic.twitter.com/tuGGLJkwcy

— Facebook Newsroom (@fbnewsroom) November 4, 2020

The company said the faulty notification was the result of a glitch that only affected a “small” number of users, though Instagram declined to say how many. By Wednesday morning, Instagram and Facebook had put a new notice at the top of users' feeds warning that results were not yet final and ballots were still being counted.

YouTube

YouTube also opted to label all election-related videos with messages that results are not yet final, but as Ars Technica pointed out, the company doesn’t explicitly bar candidates from prematurely declaring victory. Instead, the company’s guidelines address “misleading claims about voting or content that encourages interference in the democratic process.”

Researchers warned that live streams could emerge as a particularly problematic source of misinformation, as streamers can misrepresent election results or other events. That scenario ended up playing out on Tuesday, when a handful of popular channels began streaming “election results” before polls had even closed. The company eventually removed the streams, but some racked up thousands of viewers, and one became “one of Google’s most widely circulated videos in swing states,” according to researchers at the Election Integrity Partnership.

But on Wednesday, the company declined to remove a video from One America News Network titled “Trump won. MSM hopes you don’t believe your eyes.” In the video, which was first reported by CNBC, an anchor said there had been a “decisive victory” for Trump, and that voter fraud had been “rampant.” YouTube told CNBC that it wouldn’t take the video down because the clip doesn’t violate its rules, even though it appeared to be at odds with the company’s previously stated policies. The company did, however, pull ads from the video.

TikTok

The 2020 election was the first presidential election for TikTok, and the app seemed to avoid many of the bigger controversies of other platforms (likely because Trump is a vocal critic of the company, and doesn’t have an official presence on the app). As with the other platforms, TikTok introduced labels for election-related content and linked to resources about voting and election information. The company also said it would work with fact checkers to “reduce discoverability” of videos that claim victory before a race is called by the Associated Press.

But misinformation has still been a concern on the platform, just as it has on other apps. A company spokesperson said TikTok is “continuing to remove content that violates our Community Guidelines,” and that “much of what we're removing mirrors content that's being posted across the internet.”

Though it’s not clear how widespread takedowns have been, the company did confirm to New York Times reporter Taylor Lorenz that it had removed misleading videos with claims of election fraud that were posted from some prominent accounts.

Updated to include YouTube’s response to a video from One America News Network.
