How AI helped upscale an antique 1896 film to 4K

Trains! Now more realistic than ever!

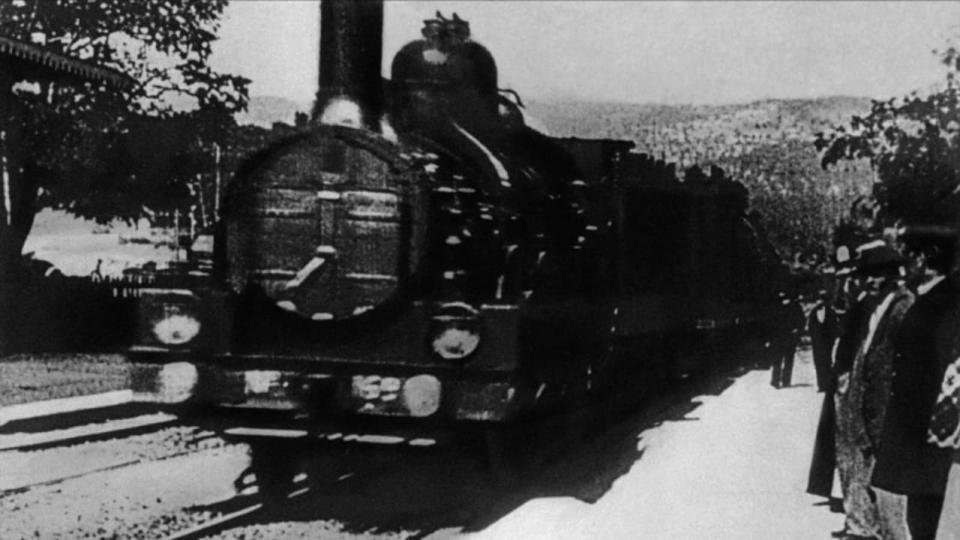

When the 50-second silent short film L'Arrivée d'un train en gare de La Ciotat premiered in 1896, some theatergoers reportedly ran for safety at the sight of a projected approaching train, thinking that a real one would burst through the screen at any moment, Looney Tunes-style. A wild thought, given the blurry, low-resolution quality of the original film. Thankfully those panicky cinephile pioneers never saw the AI-enhanced upscaled version released by Denis Shiryaev, or they would have absolutely flipped their lids.

Shiryaev leveraged a pair of publicly available enhancement programs, DAIN and Topaz Labs' Gigapixel AI, to transform the original footage into a 4K 60FPS clip. Gigapixel AI uses a proprietary interpolation algorithm which "analyzes the image and recognizes details and structures and 'completes' the image," according to Topaz Labs' website. Effectively, Topaz taught an AI to accurately sharpen and clarify images even after they've been enlarged by as much as 600 percent. DAIN, on the other hand, imagines and inserts frames between the keyframes of an existing video clip. It's the same concept as the motion smoothing feature on 4K TVs that nobody but your parents uses. In this case, however, it added enough frames to increase the rate to 60 FPS.

These are both examples of upscaling technology, which has been an essential part of broadcast entertainment since 1998, when the first high-definition televisions hit the market. Old-school standard-definition televisions displayed at 720x480 resolution, a total of 345,600 pixels' worth of content that can be shown at one time. High-definition televisions display at 1920x1080, or 2,073,600 total pixels -- six times the resolution of SD -- while 4K sets, with their 3840x2160 resolution, need 8,294,400 pixels.

You need to fill in roughly 6.2 million additional pixels to enlarge an HD image to fit on a 4K screen, so the upscaler has to figure out what those extra pixels should display. This is where the interpolation process comes in. Interpolation estimates what each of those new pixels should display based on what the pixels around them are showing; however, there are several different ways to make that estimate.
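For anyone who wants to check the math, here is a quick sketch of the pixel arithmetic using only the resolutions quoted above. The function names (`pixel_count`, `pixels_to_invent`) are made up for this illustration and don't come from any upscaling tool.

```python
# Resolutions quoted in the article, as (width, height).
RESOLUTIONS = {
    "SD": (720, 480),
    "HD": (1920, 1080),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Total pixels on screen for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixels_to_invent(src, dst):
    """Pixels an upscaler has to estimate when going from src to dst."""
    return pixel_count(dst) - pixel_count(src)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels")
    ratio = pixel_count("HD") / pixel_count("SD")
    print(f"HD has {ratio:.0f}x the pixels of SD")
    print(f"HD -> 4K: {pixels_to_invent('HD', '4K'):,} pixels to fill in")
```

The HD-to-4K gap works out to 6,220,800 pixels, meaning three out of every four pixels on the 4K screen have to be estimated rather than copied.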

The "nearest neighbor" method simply fills the blank pixels in with the same color as their nearest neighbor (hence the name). It's simple and effective but results in a jagged, overtly pixelated image. Bilinear interpolation requires a bit more processing power, but it lets the TV analyze each blank pixel based on the four source pixels surrounding it (two in each direction) and generate a gradient between them, which smooths out those jagged edges. Bicubic interpolation, on the other hand, samples from its 16 nearest neighbors. This results in accurate coloring but a blurry image. Yet by combining the results of bilinear and bicubic interpolation, TVs can account for each process's shortcomings and generate upscaled images with minimal loss of optical quality (sharpness and the occasional artifact) compared to the original.
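To make the difference concrete, here is a minimal pure-Python sketch of the first two methods on a tiny grayscale image stored as a list of rows. It is an illustration under stated assumptions, not what any TV actually runs: it handles integer scale factors only, it uses the textbook form of bilinear interpolation (blending the 2x2 block of source pixels around each new pixel), and it pins the corners of the output to the corners of the source. Bicubic is left out to keep things short.

```python
def upscale_nearest(img, factor):
    """Nearest neighbor: each output pixel copies the closest source pixel."""
    height, width = len(img), len(img[0])
    return [
        [img[y // factor][x // factor] for x in range(width * factor)]
        for y in range(height * factor)
    ]


def upscale_bilinear(img, factor):
    """Bilinear: each output pixel is a weighted blend of the 2x2 block of
    source pixels around it (assumption: output corners map to source corners)."""
    height, width = len(img), len(img[0])
    out_h, out_w = height * factor, width * factor
    out = []
    for oy in range(out_h):
        # Map the output row back to a fractional source row.
        sy = oy * (height - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, height - 1)
        fy = sy - y0
        row = []
        for ox in range(out_w):
            # Map the output column back to a fractional source column.
            sx = ox * (width - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, width - 1)
            fx = sx - x0
            # Blend horizontally along the top and bottom rows, then vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    tiny = [[0, 100]]  # one dark pixel next to one bright pixel
    print(upscale_nearest(tiny, 2)[0])
    print([round(v, 1) for v in upscale_bilinear(tiny, 2)[0]])
```

On that two-pixel example, nearest neighbor produces a hard step from dark to bright, while bilinear produces a ramp between the two values. That is the blocky-versus-smooth trade-off in miniature.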

Since the interpolation process is essentially a guessing game, why not have an AI call the shots? Using deep convolutional neural networks, programs like DAIN can analyze and map video clips and then insert generated filler images between existing frames.
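To see what "inserting frames" means at its most basic, here is a deliberately crude sketch that cross-fades between neighboring frames. This is not how DAIN works: DAIN relies on trained neural networks, and nothing here attempts to track motion. The function names (`blend_frames`, `insert_frames`) and the frame format (a list of rows of grayscale values) are assumptions made up for this example.

```python
def blend_frames(frame_a, frame_b, t):
    """Linear cross-fade between two same-sized frames, with t in [0, 1]."""
    return [
        [(1 - t) * a + t * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def insert_frames(frames, extra):
    """Return a new clip with `extra` blended frames between each original pair."""
    if not frames:
        return []
    out = []
    for current, following in zip(frames, frames[1:]):
        out.append(current)
        for i in range(1, extra + 1):
            # Evenly spaced blend positions between the two real frames.
            out.append(blend_frames(current, following, i / (extra + 1)))
    out.append(frames[-1])
    return out


if __name__ == "__main__":
    clip = [[[0]], [[90]]]  # two 1x1 "frames": black, then light gray
    smoother = insert_frames(clip, 2)
    print(len(smoother))  # two original frames plus two generated ones
```

A cross-fade like this ghosts anything that moves, since an object in motion shows up faintly in both its old and new positions. Avoiding that is the reason to bring in a learned model that estimates where things are actually going.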

The effect isn't perfect by any means. Engadget's video producer Chris Schodt noted multiple visual artifacts upon close inspection, including a rippling train motion and melding pedestrians. "In short, it looks great as a YouTube-sized piece," Schodt told Engadget. "But blow it up to full screen and I feel like the foreground objects and the interior of objects are pretty good, but if you look at the edges of things, or stuff in the backdrop, the seams come apart a bit."

Even with its current shortcomings, Shiryaev's technique offers some enticing opportunities. Could we soon see a silent film renaissance as old film stock is digitized and augmented by AI?

Yahoo Finance
